-

Using script variables in Azure Release Pipelines

Azure Pipelines still has 'classic' Release Pipelines. They have some limitations compared to the newer YAML-based deployment jobs, but sometimes the visualisation they provide for both pipeline design and deployment progress is still preferred.

I recently wanted to make a task in a release pipeline conditional on the value of a variable set in an earlier script task, but I wasn't quite sure how to reference the variable in the conditional expression.

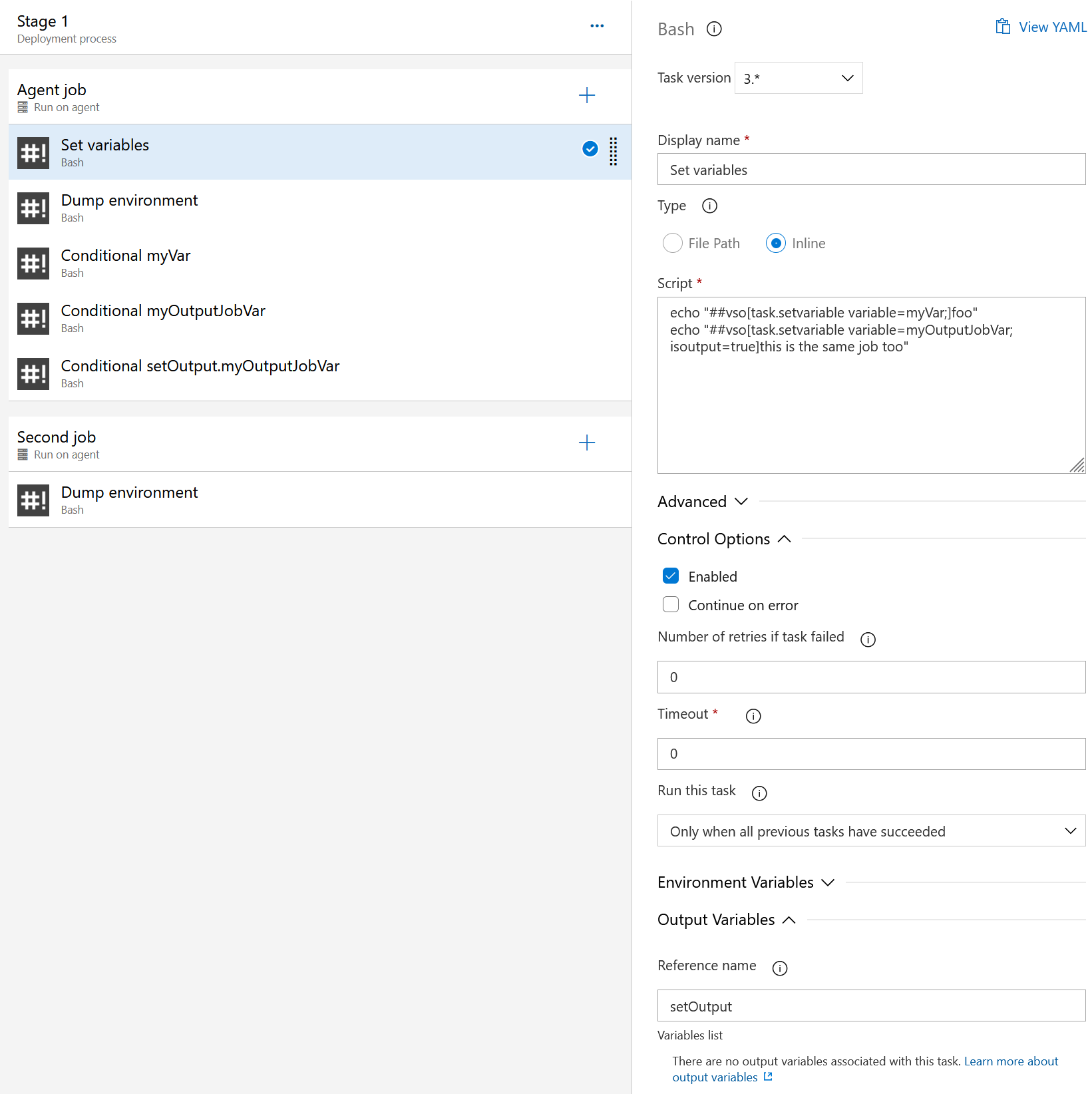

To figure it out, I did a little experiment. I started with a script task that contained this content:

```bash
echo "##vso[task.setvariable variable=myJobVar]this is the same job"
echo "##vso[task.setvariable variable=myOutputJobVar;isoutput=true]this is the same job too"
```

The script sets two variables, the second being an 'output' variable.

In the Output Variables section, the script task also sets the Reference name to `setOutput`.
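Those `##vso[...]` lines are logging commands: the agent watches each script's standard output for them. If a script needs to set several variables, a small bash function can keep the syntax in one place. A minimal sketch; `set_pipeline_var` is a hypothetical name, not something Azure Pipelines provides:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not provided by Azure Pipelines): print a
# task.setvariable logging command. The agent reads the script's stdout and
# acts on any line that starts with ##vso[...].
#   set_pipeline_var <name> <value> [true to mark it as an output variable]
set_pipeline_var() {
  local name="$1" value="$2" is_output="${3:-false}"
  if [ "$is_output" = "true" ]; then
    printf '##vso[task.setvariable variable=%s;isoutput=true]%s\n' "$name" "$value"
  else
    printf '##vso[task.setvariable variable=%s]%s\n' "$name" "$value"
  fi
}

# Same effect as the two echo lines above
set_pipeline_var myJobVar "this is the same job"
set_pipeline_var myOutputJobVar "this is the same job too" true
```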

I then created some additional script tasks, and under the Control Options section, change Run this task to

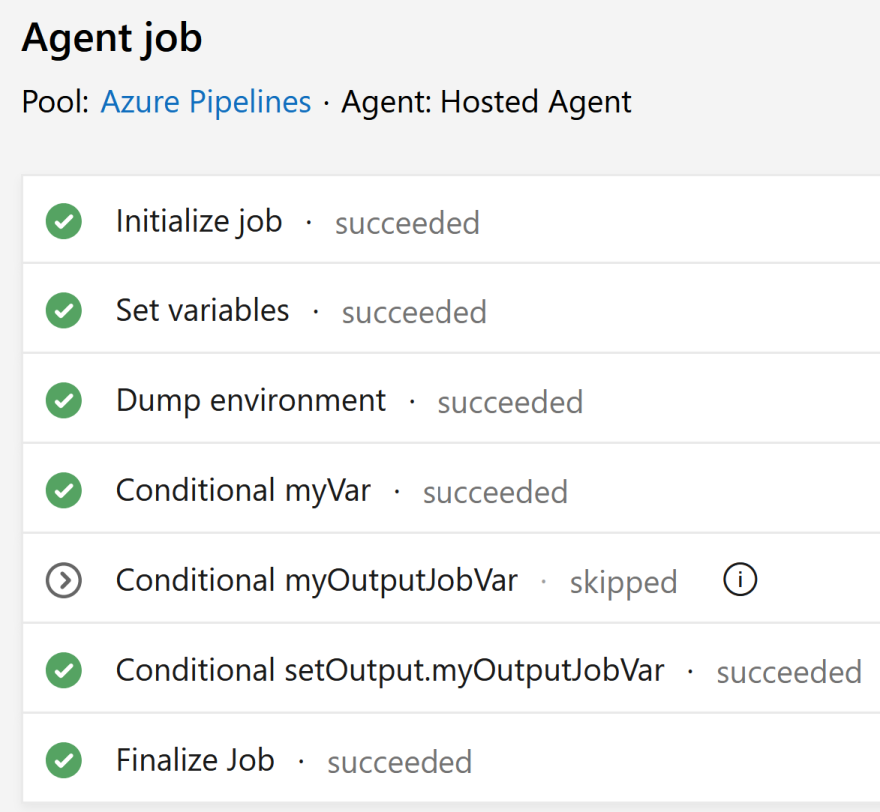

Custom conditions, and then entered one of these expressions in each:and(succeeded(), ne(variables.myVar, '')) and(succeeded(), ne(variables['myOutputJobVar'], '')) and(succeeded(), ne(variables['setOutput.myOutputJobVar'], ''))Note that as we're working with conditions and expressions, we use the runtime expression syntax to reference variables.

So from this, I can conclude:

- For a regular variable, use `variables.myJobVar`, where the name of the variable was `myJobVar`.
- For an output variable, use `variables['setOutput.myOutputJobVar']`, where the name of the variable was `myOutputJobVar` and the task had a reference name of `setOutput`.

What about referencing a variable from a subsequent job?

Unfortunately, this is not possible with classic release pipelines.

The closest you can get to this is a hack involving using the Azure DevOps API to update a predefined release definition variable.

Output variables are primarily intended to allow consumption in downstream jobs and stages. Given this is not possible with release pipelines, there's no real advantage to creating output variables in your scripts. To keep things simple, just define them as regular variables.


-

SixPivot Summit 2022

I started working at SixPivot back in February 2021. Given the state of the pandemic, all my interactions were done online. But I was hoping it wouldn't be too long before I got to meet all the other 'Pivots' (as SixPivot folks call ourselves). 18 months later, the wait was a little longer than I'd thought it would be.

So with all that pent-up anticipation, did the in-person SixPivot Summit meet expectations?

YES!

The summit was held at Peppers Salt Resort and Spa Kingscliff, which is just south of the Queensland/NSW border. A very nice place to visit.

I flew out of Adelaide on a cold and wintery morning and arrived a couple of hours later at Gold Coast Airport, where it was also overcast and raining! Not quite as cold though.

Friday afternoon was for settling in, followed by a pleasant walk down to the local lawn bowls club for a few ends of social bowling with most of the other Pivots and their families, and then dinner nearby at the local surf club.

Saturday was the summit proper. A full day of sessions from the SixPivot leadership team, but very much in an interactive and open format. The openness is something I definitely appreciate.

I remember thinking during the afternoon, "I really feel like I've found my work 'home'". A sense of shared values and purpose, but still with a range of opinions, experiences and perspectives. That had certainly been my sense over the last 18 months, but it was great to have it confirmed in person.

On Saturday night, staff and their families were all invited to a dinner held at the resort. Great food and more time to spend getting to know everyone.

Sunday was our own time, with my flight back home in the early afternoon. Clear skies had returned, so I enjoyed a quick walk along the beach before breakfast. Afterwards (it being a Sunday), I watched my local church service from the comfort of my hotel room. Handy that they live stream on YouTube!

There were still plenty of Pivots around so I took the opportunity for more chatting and saying farewell to those leaving on earlier flights. Then a friendly taxi ride back to the airport and an uneventful flight back home to Adelaide.

It was a great weekend. I definitely feel more connected with, and have a better understanding of, my colleagues. I'm looking forward to our next opportunity to come together.

-

Building and using Azure Pipelines Container Jobs

The big advantage of using self-hosted build agents with a build pipeline is that you can benefit from installing specific tools and libraries, as well as caching packages between builds.

This can also be a big disadvantage - for example, if a build before yours decided to install Node version 10, but your build assumes you have Node 14, then you're in for a potentially nasty surprise when you add an NPM package that specifies it requires Node > 10.

An advantage of Microsoft-hosted build agents (for Azure Pipelines or GitHub Actions) is every build job gets a fresh build environment. While they do come with pre-installed software, you're free to modify and update to your requirements, without fear that you'll impact any subsequent build jobs.

The downside of these agents is that any prerequisites you need for your build have to be installed each time your build job runs. The Cache task might help with efficiently restoring packages, but it probably won't have much impact on installing your toolchain.

This can be where Container Jobs come into play.

Whilst this post focuses on Azure Pipelines, GitHub Actions also supports running jobs in a container, so the same principles will apply.

Container jobs

Containers provide isolation from the host and allow you to pin specific versions of tools and dependencies. Host jobs require less initial setup and infrastructure to maintain.

You could use a public Docker image, like `ubuntu:20.04`, or you could create your own custom image that includes additional prerequisites.

To make a job into a container job, you add a `container`, like this:

```yaml
container: ubuntu:20.04

steps:
- script: printenv
```

With this in place, when the job starts, the image is automatically downloaded, a container using the image is started, and (by default) all the steps for the job are run in the context of the container.
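Because every step now runs inside the container, a missing tool only shows up when the step that needs it fails. One option is a first step that checks for everything the job relies on. A minimal bash sketch; `require_tools` is a hypothetical helper and the tool names in the usage line are examples only:

```bash
#!/usr/bin/env bash
# Hypothetical preflight check (not an Azure Pipelines feature): report every
# command from the argument list that isn't on PATH inside the container.
# Returns 0 if all are present, 1 if any are missing.
require_tools() {
  local tool missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "Missing required tool: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, running `require_tools git dotnet pwsh || exit 1` as the first `script` step would fail the job straight away, with a clear message, if the image lacked PowerShell.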

Note that it's now up to you to ensure that the image you've selected for your container job has all the tools required by all the tasks in the job. For example, the `ubuntu:20.04` image doesn't include PowerShell, so if I had tried to use a `PowerShell@2` task, it would fail (as it couldn't find `pwsh`).

If the particular toolchain you require is closely tied to the source code you're building (e.g. C# 10 source code requires at least the .NET 6 SDK), then I think there's a strong argument for versioning the toolchain alongside your source code. But if your toolchain definition is in the same source code repository as your application code, how do you efficiently generate the toolchain image that can be used to build the application?

The approach I've adopted is to have a pipeline with two jobs. The first job is just responsible for maintaining the toolchain image (aka building the Docker image).

The second job uses this Docker image (as a container job) to provide the environment used to build the application.

Building the toolchain image can be time-consuming. I want my builds to finish as quickly as possible. It would be ideal if we could somehow completely skip (or minimise) the work for this if there were no changes to the toolchain since the last time we built it.

I've applied two techniques to help with this:

`--cache-from`

If your agent is self-hosted, then Docker builds can benefit from layer caching. A Microsoft-hosted agent is new for every build, so there's no layer cache from the previous build.

`docker build` has a `--cache-from` option. This allows you to reference another image whose layers may be used as a cache source. The ideal cache source for an image would be the previous version of the same image.

For an image to be used as a cache source, it needs extra metadata included in the image when it is created. This is done by adding the build argument `--build-arg BUILDKIT_INLINE_CACHE=1`, and ensuring we use BuildKit by defining the environment variable `DOCKER_BUILDKIT: 1`.

Here's the build log showing how, because the layers in the cache were a match, they were used rather than the related commands in the Dockerfile needing to be re-executed.

```
#4 importing cache manifest from ghcr.io/***/azure-pipelines-container-jobs:latest
#4 sha256:3445b7dd16dde89921d292ac2521908957fc490da1e463b78fcce347ed21c808
#4 DONE 0.9s

#5 [2/2] RUN apk add --no-cache --virtual .pipeline-deps readline linux-pam && apk --no-cache add bash sudo shadow && apk del .pipeline-deps && apk add --no-cache icu-libs krb5-libs libgcc libintl libssl1.1 libstdc++ zlib && apk add --no-cache libgdiplus --repository https://dl-3.alpinelinux.org/alpine/edge/testing/ && apk add --no-cache ca-certificates less ncurses-terminfo-base tzdata userspace-rcu curl && curl -sSL https://dot.net/v1/dotnet-install.sh | bash /dev/stdin -Version 6.0.302 -InstallDir /usr/share/dotnet && ln -s /usr/share/dotnet/dotnet /usr/bin/dotnet && apk -X https://dl-cdn.alpinelinux.org/alpine/edge/main add --no-cache lttng-ust && curl -L https://github.com/PowerShell/PowerShell/releases/download/v7.2.5/powershell-7.2.5-linux-alpine-x64.tar.gz -o /tmp/powershell.tar.gz && mkdir -p /opt/microsoft/powershell/7 && tar zxf /tmp/powershell.tar.gz -C /opt/microsoft/powershell/7 && chmod +x /opt/microsoft/powershell/7/pwsh && ln -s /opt/microsoft/powershell/7/pwsh /usr/bin/pwsh && pwsh --version
#5 sha256:675dec3a2cb67748225cf1d9b8c87ef1218f51a4411387b5e6a272ce4955106e
#5 pulling sha256:43a07455240a0981bdafd48aacc61d292fa6920f16840ba9b0bba85a69222156
#5 pulling sha256:43a07455240a0981bdafd48aacc61d292fa6920f16840ba9b0bba85a69222156 4.6s done
#5 CACHED

#8 exporting to image
#8 sha256:e8c613e07b0b7ff33893b694f7759a10d42e180f2b4dc349fb57dc6b71dcab00
#8 exporting layers done
#8 writing image sha256:7b0a67e798b367854770516f923ab7f534bf027b7fb449765bf26a9b87001feb done
#8 naming to ghcr.io/***/azure-pipelines-container-jobs:latest done
#8 DONE 0.0s
```

Skip if nothing to do

A second thing we can do is figure out if we actually need to rebuild the image in the first place. If the files in the directory where the Dockerfile is located haven't changed, then we can just skip all the remaining steps in the job!

This is calculated by a PowerShell script, originally from this GitHub Gist.
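Putting the two techniques together, here is a rough bash sketch. It is not the Gist's PowerShell script: it assumes the pipeline can compare against a known base commit with `git diff`, and the function names, the `dockerChanged` variable, the `DRY_RUN` switch and the image name are all hypothetical:

```bash
#!/usr/bin/env bash
# Rough shell alternative to the PowerShell change check (NOT the Gist's
# logic): rebuild only when files under the Dockerfile's directory differ
# between a base commit and HEAD.
#   docker_dir_changed <base-commit> <dockerfile-dir>   -> prints true/false
docker_dir_changed() {
  local base="$1" dir="$2"
  # --quiet exits 0 when there are no differences, 1 when there are, and
  # 128 on errors (e.g. the base commit isn't in a shallow clone). Anything
  # other than 0 is treated as "changed", erring on the side of rebuilding.
  if git diff --quiet "$base" HEAD -- "$dir"; then
    echo "false"
  else
    echo "true"
  fi
}

# Build the toolchain image with BuildKit, using the previously pushed
# <image>:latest as a cache source and embedding inline cache metadata so the
# new image can serve as the cache source next time.
# With DRY_RUN=1 it only prints the command instead of running it.
#   build_toolchain_image <image-without-tag> <build-context>
build_toolchain_image() {
  local image="$1" context="$2"
  local -a cmd
  cmd=(docker build
       --cache-from "$image:latest"
       --build-arg BUILDKIT_INLINE_CACHE=1
       --tag "$image:latest"
       "$context")
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "DOCKER_BUILDKIT=1 ${cmd[*]}"
  else
    DOCKER_BUILDKIT=1 "${cmd[@]}"
  fi
}

# Publish the result as a pipeline variable so later steps can be skipped
# with a condition. The variable name is hypothetical.
#   publish_docker_changed <base-commit> <dockerfile-dir>
publish_docker_changed() {
  echo "##vso[task.setvariable variable=dockerChanged]$(docker_dir_changed "$1" "$2")"
}
```

In a pipeline, the first step might run `publish_docker_changed HEAD~1 docker` (assuming the Dockerfile lives in a `docker` directory), and the build and push steps would then carry a condition such as `and(succeeded(), eq(variables.dockerChanged, 'true'))`. Comparing with `HEAD~1` only looks at the latest commit, so a push containing several commits could slip through; comparing against the commit of the last successful image build would be more robust. The built image still needs pushing (e.g. with `docker push`) so the next run can use it as a cache source.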

Working example

I've created a sample project at https://github.com/flcdrg/azure-pipelines-container-jobs that demonstrates this approach in action.

In particular, note the following files:

Some rough times I saw for the 'Build Docker Image' job:

| Phase | Time (seconds) |
| --- | --- |
| Initial build | 99 |
| Incremental change | 32 |
| No change | 8 |

Additional considerations

The sample project works nicely, but if you did want to use this in a project that made use of pull request builds, you might want to adjust the logic slightly.
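One way to implement that adjustment is to choose the image tag from the build reason, following the rules described next. A minimal bash sketch, assuming the predefined `Build.Reason` and `System.PullRequest.PullRequestId` variables, which scripts see as the environment variables `BUILD_REASON` and `SYSTEM_PULLREQUEST_PULLREQUESTID`; the `pr-<id>` tag scheme and the function name are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical tag-selection rule:
#  - a PR build that changes the image gets its own tag (pr-<id>, an invented
#    naming scheme), so no other build picks that image up before the merge
#  - every other build uses latest
# The true/false argument comes from whatever change detection the pipeline uses.
#   choose_image_tag <docker-changed: true|false>
choose_image_tag() {
  local changed="$1"
  if [ "${BUILD_REASON:-}" = "PullRequest" ] && [ "$changed" = "true" ]; then
    # Fail loudly rather than produce a bare "pr-" tag
    echo "pr-${SYSTEM_PULLREQUEST_PULLREQUESTID:?PR id not set}"
  else
    echo "latest"
  fi
}
```

Note the sketch treats every non-PR build like a `main` build; if other branches also run CI builds, you would probably want to check `BUILD_SOURCEBRANCH` as well.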

Docker images tagged `latest` should be preserved for use with `main` builds, or PR builds that don't modify the image. A PR build that includes changes to the Docker image should use that modified image, but no other builds should use that image until it has been merged into `main`.

Is this right for me?

Measuring the costs and benefits of this approach is essential. If the time spent building the image, plus the time to download the image for the container job, exceeds the time to build without a container, then using a container job for performance alone doesn't make sense.
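The comparison itself is simple arithmetic, as long as the build inside the container is counted too. A minimal bash sketch, where every duration is a number you would measure for your own pipeline and the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical break-even check for "is a container job worth it for speed?".
# All arguments are durations in whole seconds that you measure yourself:
#   container_job_pays_off <image-build> <image-pull> <build-in-container> <build-on-host>
# Returns success (0) only if the container route is faster overall.
container_job_pays_off() {
  local image_build="$1" image_pull="$2" container_build="$3" host_build="$4"
  (( image_build + image_pull + container_build < host_build ))
}
```

For example, with the 8-second 'no change' image job from the table above and made-up figures of 30 seconds to pull the image, 60 seconds to build in the container and 120 seconds to build on the host, `container_job_pays_off 8 30 60 120` succeeds (98 < 120).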

Even if you're adopting container jobs primarily for the isolation they bring to your builds, it's useful to understand any performance impacts on your build times.

Summary

In this post, we saw how container jobs can make a build pipeline more reliable, and how they can potentially reduce the time to build completion.