
STEPtember 2021

It's the last day of September and also the last day of "STEPtember"!

STEPtember is a fundraiser to support people with, and research into, cerebral palsy. Participants are asked to take at least 10,000 steps each day of the month of September.

I found out about STEPtember through my colleagues at SixPivot, and signed up with a team to participate this year. Since I've been working from home, I try to take a regular morning walk before starting work, but I found that I needed to do a bit more than that (like cycling, gardening or other kinds of exercise) to make it over the 10,000 threshold. I hope the extra steps are benefiting my health, and that any donations help a great cause.

If you'd like to sponsor me to help me get to my individual fundraising target, head over to my STEPtember profile page (donations are tax deductible for Australians).


Azure Functions - Enable specific functions for debugging

I've been using Azure Functions quite a bit. Indeed, I've recently been speaking about them and their new support for .NET 5 (and soon .NET 6)!

Today I wanted to debug an Azure Function application, but I didn't want all of the functions in the application to run (as some of them access resources that I don't have locally). I discovered that you can add a `functions` property to your `host.json` file to list the specific functions that should run, e.g.:

```json
{
  "version": "2.0",
  "functions": [ "function1", "function2" ]
}
```

But I'd really prefer not to edit `host.json`, as that file is under source control and I'd then need to remember not to commit those changes. I'd much prefer not to have to remember too many things!

There's a section in the documentation that describes how you can also set these values in your `local.settings.json` (which isn't usually under source control). But the examples given are for simple boolean properties. How do you enter an array value?

To find out, I temporarily set the values in `host.json` and used the `IConfigurationRoot.GetDebugView()` extension method to dump out all the configuration to see how they were represented. Here's the answer:

```json
{
  "Values": {
    "AzureFunctionsJobHost__functions__0": "function1",
    "AzureFunctionsJobHost__functions__1": "function2"
  }
}
```

The `__0` and `__1` suffixes represent each element in the array. With that in place, when I run the Azure Function locally, only `function1` and `function2` will run. All others will be ignored. Just add additional properties (incrementing the number) to enable more functions.
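To make the mapping concrete, here's a minimal Python sketch that turns a `host.json`-style fragment into the equivalent `local.settings.json` entries. It isn't part of the Functions tooling; `flatten` and `local_settings_for` are hypothetical helper names, and it simply assumes the convention shown above: an `AzureFunctionsJobHost` prefix, `__` between each level of nesting, and a zero-based index for each array element.

```python
import json


def flatten(prefix, value, out):
    """Flatten nested dicts/lists into '__'-separated keys.

    Dict keys become path segments and list elements get their zero-based
    index (e.g. AzureFunctionsJobHost__functions__0).
    """
    if isinstance(value, dict):
        for key, child in value.items():
            flatten(f"{prefix}__{key}", child, out)
    elif isinstance(value, list):
        for index, child in enumerate(value):
            flatten(f"{prefix}__{index}", child, out)
    else:
        # "Values" entries in local.settings.json must be strings, so
        # non-string leaves are written in JSON form (True -> "true").
        out[prefix] = value if isinstance(value, str) else json.dumps(value)
    return out


def local_settings_for(host_overrides):
    """Build a "Values" section that overrides the given host.json settings."""
    return {"Values": flatten("AzureFunctionsJobHost", host_overrides, {})}


if __name__ == "__main__":
    settings = local_settings_for({"functions": ["function1", "function2"]})
    print(json.dumps(settings, indent=2))
```

Running it prints the same `Values` block as above. A real `local.settings.json` would also need your other settings (such as `FUNCTIONS_WORKER_RUNTIME`) alongside these entries.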

GitHub Action build not running on main/master

Maybe I could call this 'The case of the Grumpy GitHub Action'?

I recently added the Auto-merge on a pull request workflow to my https://github.com/flcdrg/dependabot-lockfiles repository.

The idea is that when Dependabot creates a pull request to update a component, and you've enabled the Allow auto-merge option in the repository settings, the pull request is merged automatically once all requirements are met.

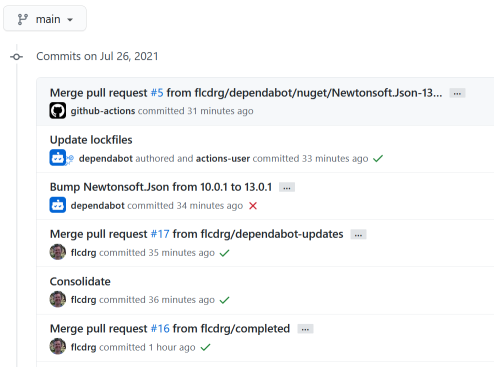

But after making that change, I noticed that while builds were running correctly for pull requests, the merge commit didn't have a corresponding build!

My first thought was: had I made a mistake in one of the workflows? But they were working for pull requests, so if there was a typo it should have shown up there.

I then took a closer look at the builds that were working. Looking at the screenshot above, I'm expecting to see a green tick next to each merge commit (the commits labelled 'Merged pull request').

There are ticks for the two pull requests that I created myself (my GitHub username is 'flcdrg'), but none for the most recent commit. And interestingly, that merge commit says it was committed by 'github-actions'. Hmm... I wonder if that's significant?

It reminded me of something I'd read previously.

When you use the repository's GITHUB_TOKEN to perform tasks on behalf of the GitHub Actions app, events triggered by the GITHUB_TOKEN will not create a new workflow run.

I began to form a hypothesis. The step in that workflow that enables auto-merge looks like this:

- name: Enable auto-merge for Dependabot PRs run: gh pr merge --auto --merge "$PR_URL" env: PR_URL: ${{github.event.pull_request.html_url}} GITHUB_TOKEN: ${{secrets.GITHUB_TOKEN}}It's using the GitHub CLI to configure the pull request to enable auto-merge. What I did notice is that it's passing through

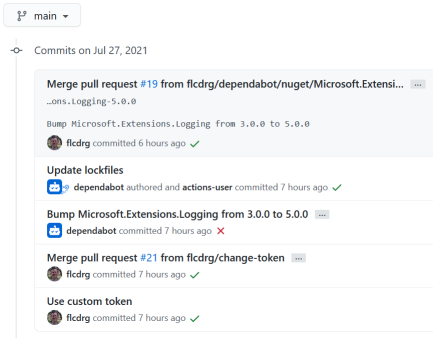

GITHUB_TOKENas an environment variable. On reflection, that kind of makes sense as if you recall the merge commit was 'committed' by 'github-actions'. I guess that's the username that is associated with GITHUB_TOKEN.I wondered whether changing the token might help.

I have a personal access token that I'd previously created with repository access. I added it to this repository as a secret named `PAT_REPO_FULL` and updated the workflow to use `${{secrets.PAT_REPO_FULL}}`.

When the next Dependabot pull request was merged, this time it showed the committer as me (as the token was for my account), and success: the build now runs correctly!
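The rule quoted above can be boiled down to a tiny Python model. This is a simplification written for illustration only: `creates_workflow_run` is a hypothetical name, it assumes a workflow is already configured to listen for the event, and it ignores branch filters and other trigger conditions. The two exceptions, `workflow_dispatch` and `repository_dispatch`, are the ones GitHub documents for `GITHUB_TOKEN`.

```python
# Events that still start a new workflow run when triggered with GITHUB_TOKEN.
GITHUB_TOKEN_EXCEPTIONS = {"workflow_dispatch", "repository_dispatch"}


def creates_workflow_run(event: str, token: str) -> bool:
    """Simplified model: does an event caused with `token` start a new run?

    `token` is "GITHUB_TOKEN" for the repository's built-in token; any other
    value stands for a user credential such as a personal access token.
    Assumes some workflow is configured to listen for `event`.
    """
    if token == "GITHUB_TOKEN":
        return event in GITHUB_TOKEN_EXCEPTIONS
    return True


if __name__ == "__main__":
    # Completing the auto-merge pushes the merge commit to the default branch.
    for token in ("GITHUB_TOKEN", "PAT_REPO_FULL"):
        print(token, "->", creates_workflow_run("push", token))
```

Running it prints `GITHUB_TOKEN -> False` and `PAT_REPO_FULL -> True`, which lines up with what I saw: the merge commit only got a build once the auto-merge step used my personal access token.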