-

Provision an Azure Virtual Machine with Terraform Cloud

Sometimes I need to spin up a virtual machine to quickly test something out on a 'vanilla' machine, for example, to test out a Chocolatey package that I maintain.

Most of the time I log in to the Azure Portal and click around to create a VM. The option to create an Azure virtual machine with a preset configuration does make it a bit easier, but it's still lots of clicking. Maybe I should try automating this!

There are a few choices for automating, but seeing as I've been using Terraform lately I thought I'd try that out, together with Terraform Cloud. As I'll be putting the Terraform files in a public repository on GitHub, I can use the free tier for Terraform Cloud.

You can find the source for the Terraform files at https://github.com/flcdrg/terraform-azure-vm/.

You'll also need to have both the Azure CLI and Terraform CLI installed. You can do this easily via Chocolatey:

```shell
choco install terraform
choco install azure-cli
```

Setting up Terraform Cloud Workspace with GitHub

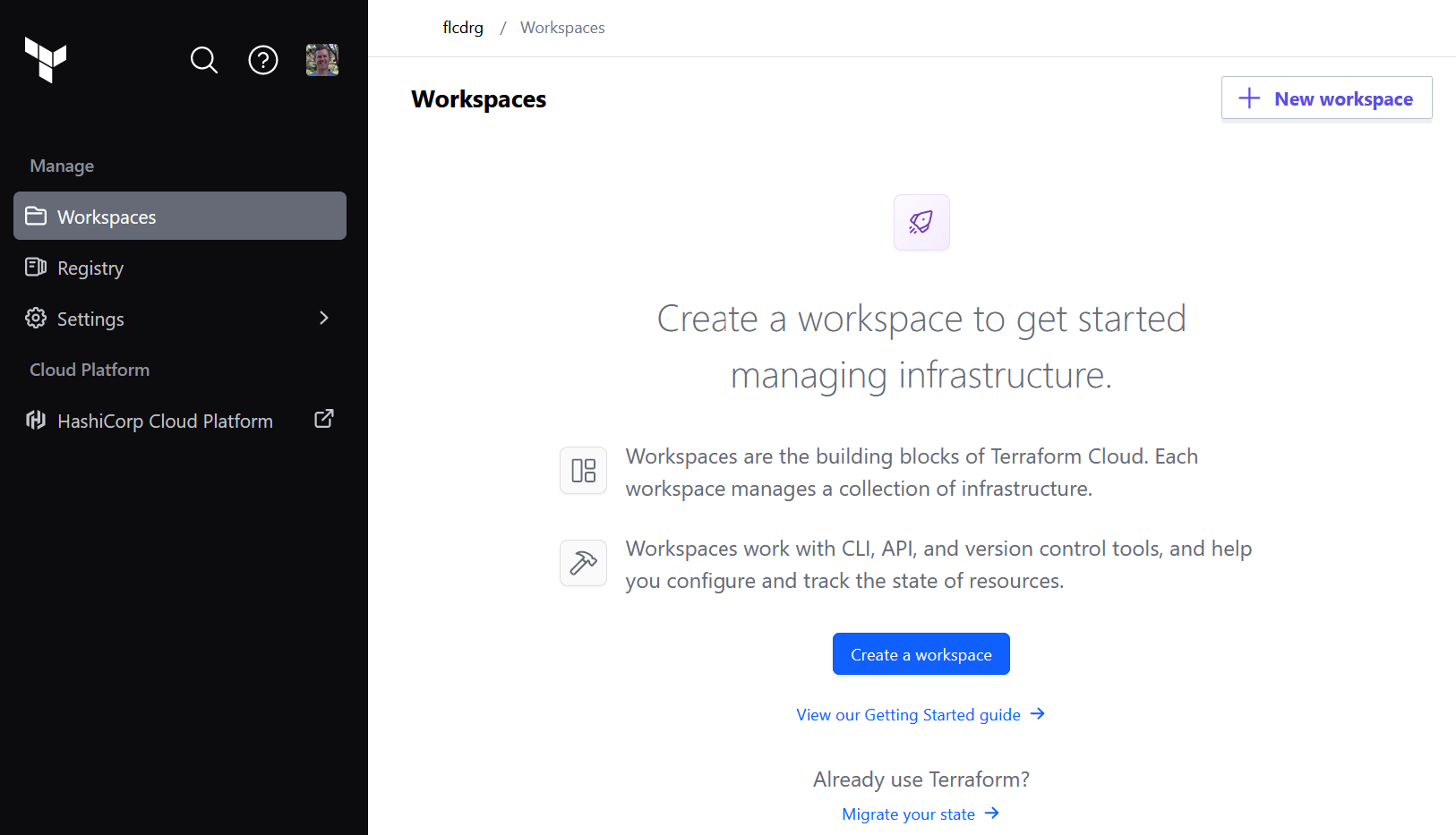

- Log in (or sign up) to Terraform Cloud at https://app.terraform.io, select (or create) your organisation, then go to Workspaces and click on Create a workspace.

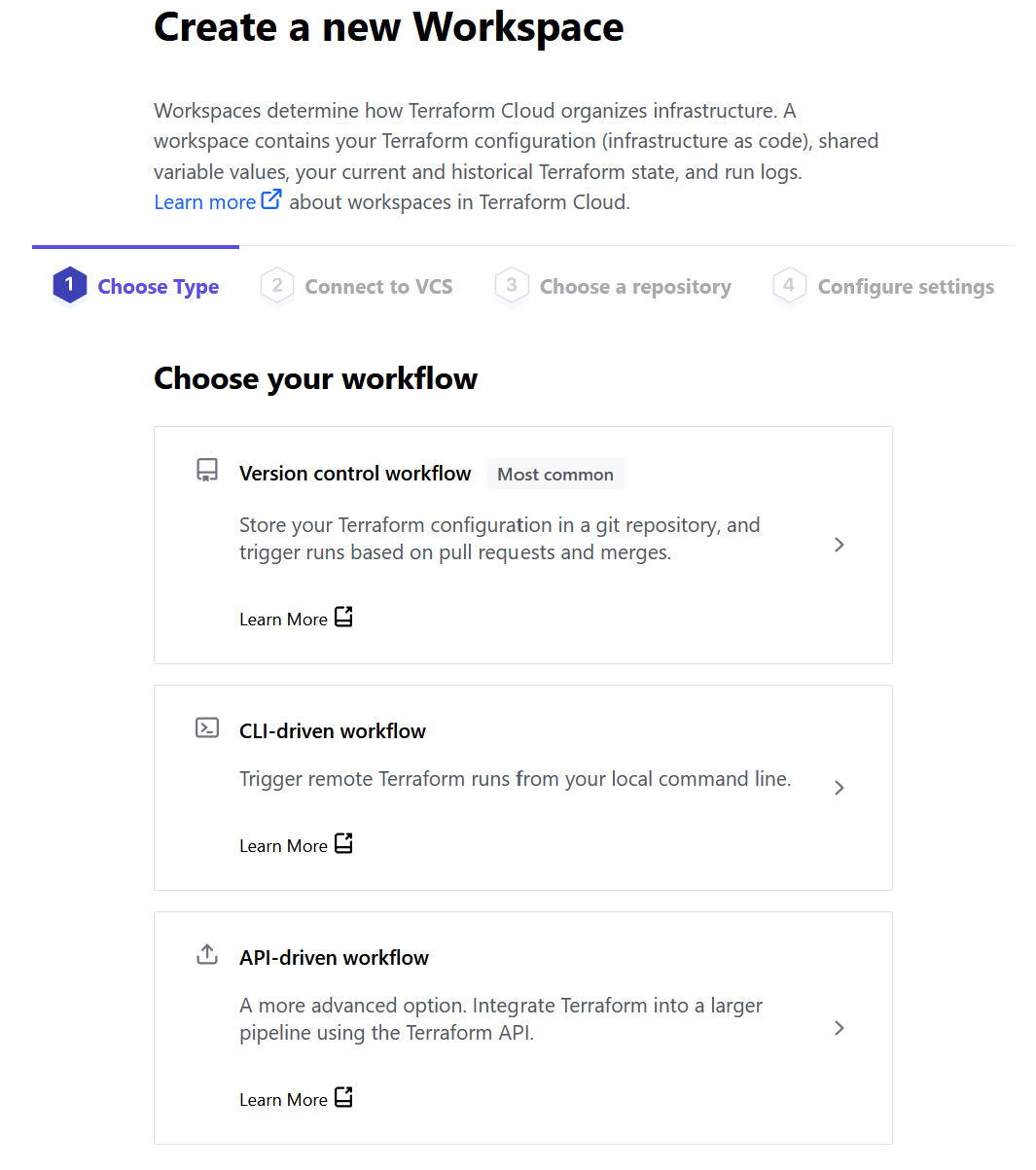

- Select how you'd like to trigger a workflow. To keep things simple, I chose Version control workflow.

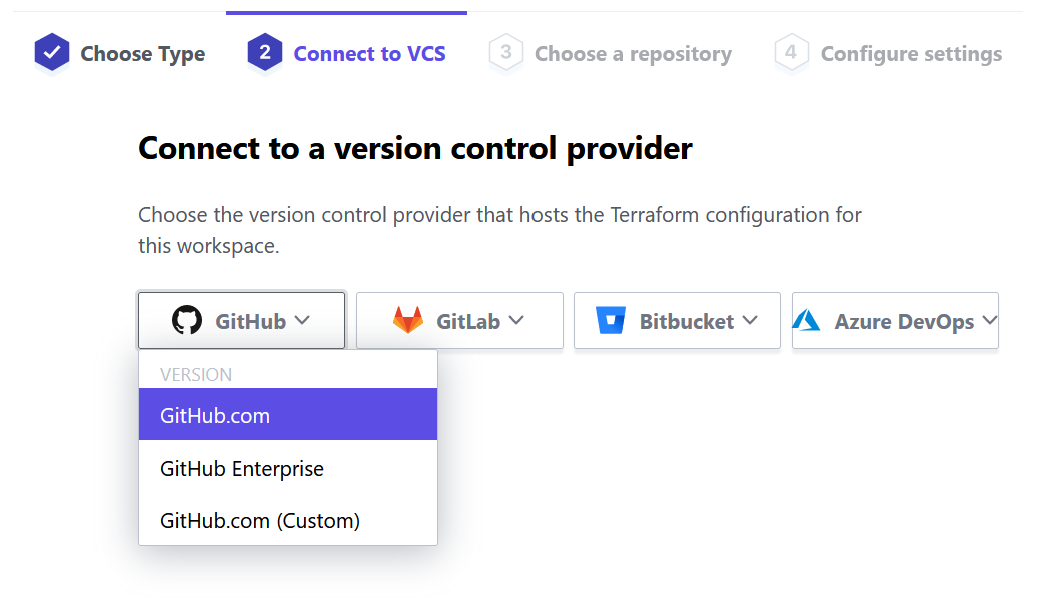

- Select the version control provider: GitHub.com.

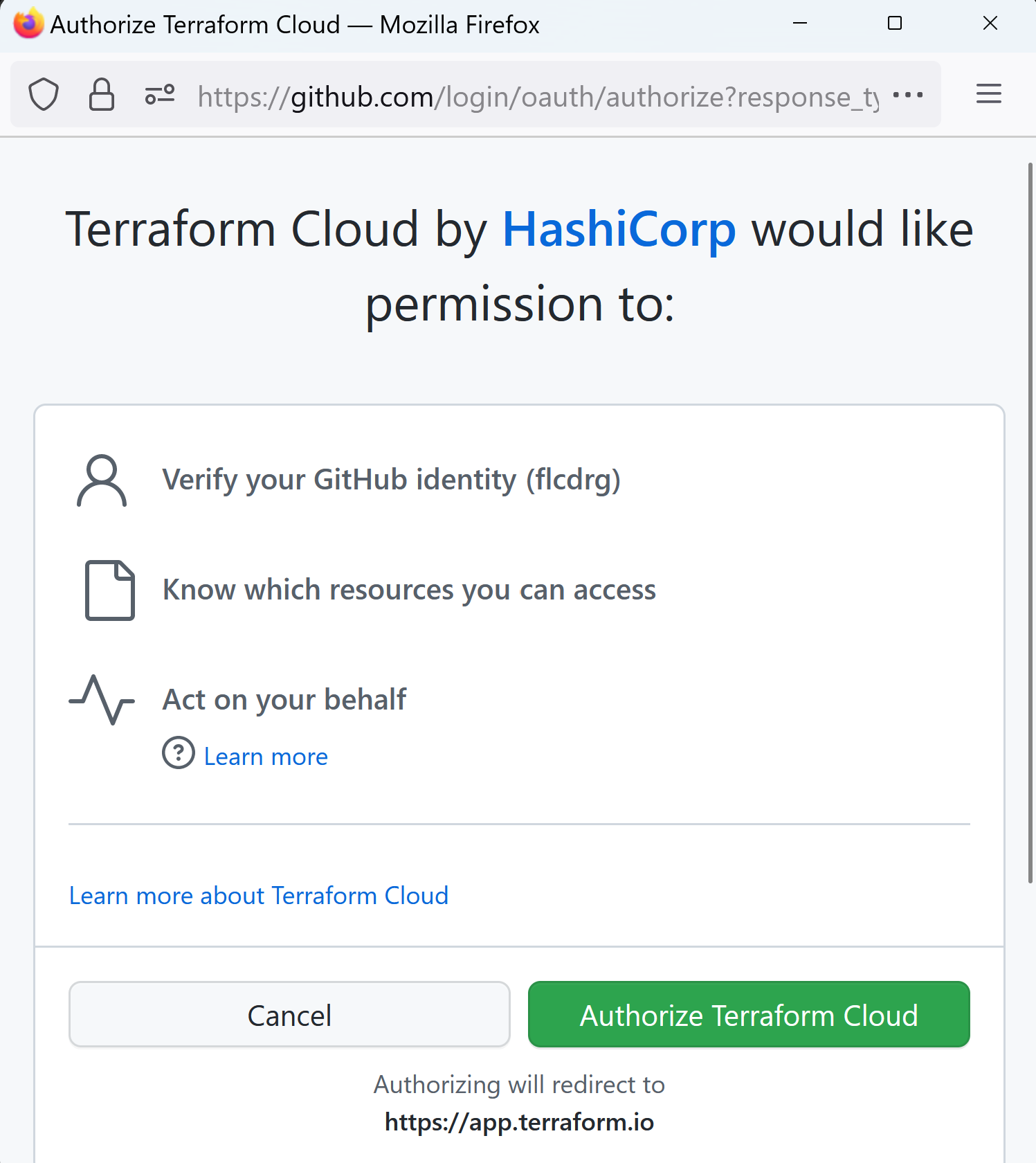

- You will now need to authenticate with GitHub.

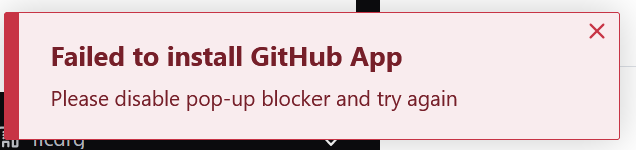

- Watch out if you get a notification about a pop-up blocker. If you do, then enable pop-ups for this domain.

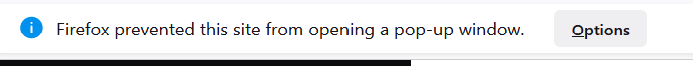

- Choose which GitHub account or organisation to use:

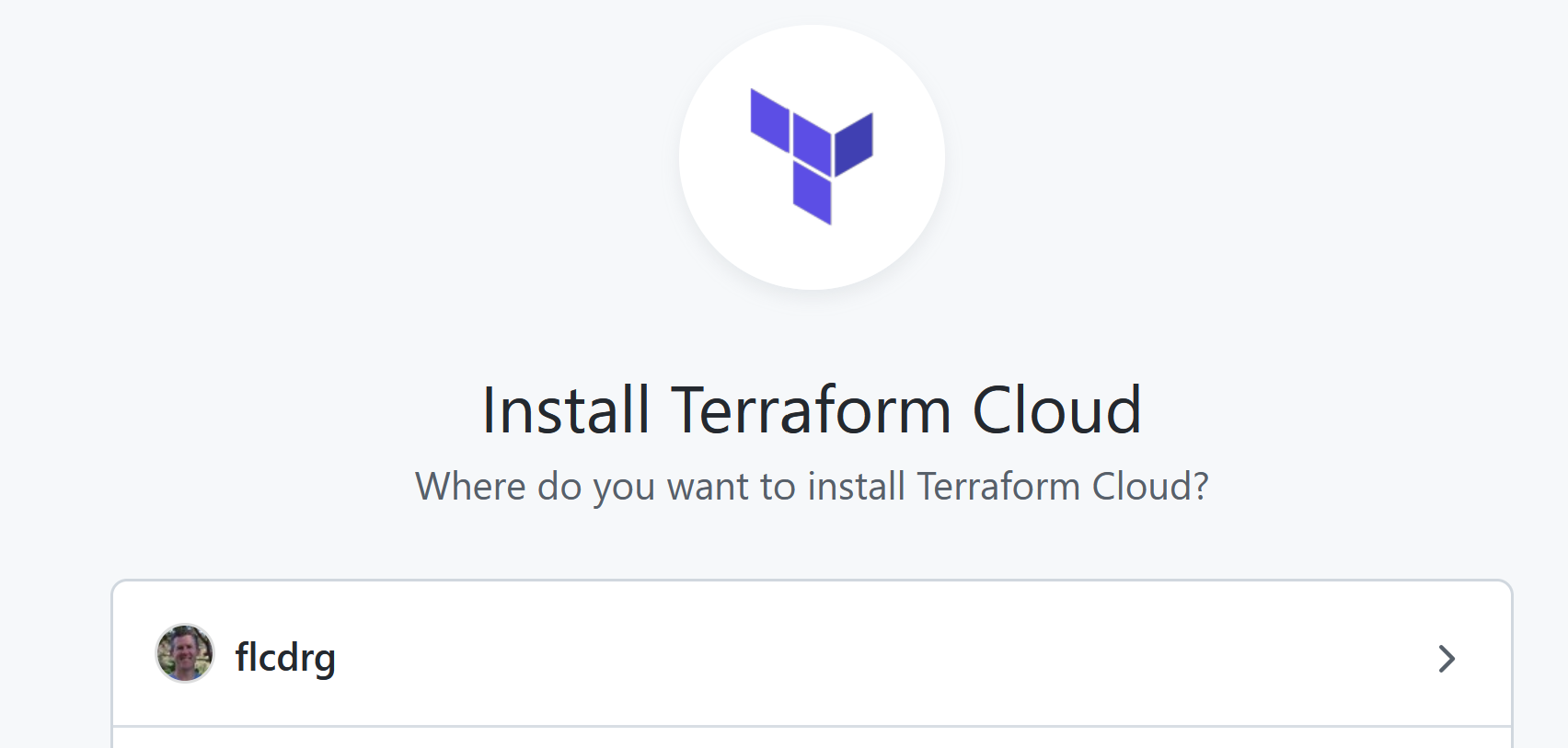

- Select which repositories should be linked to Terraform Cloud.

- If you use multi-factor authentication then you'll need to approve the access.

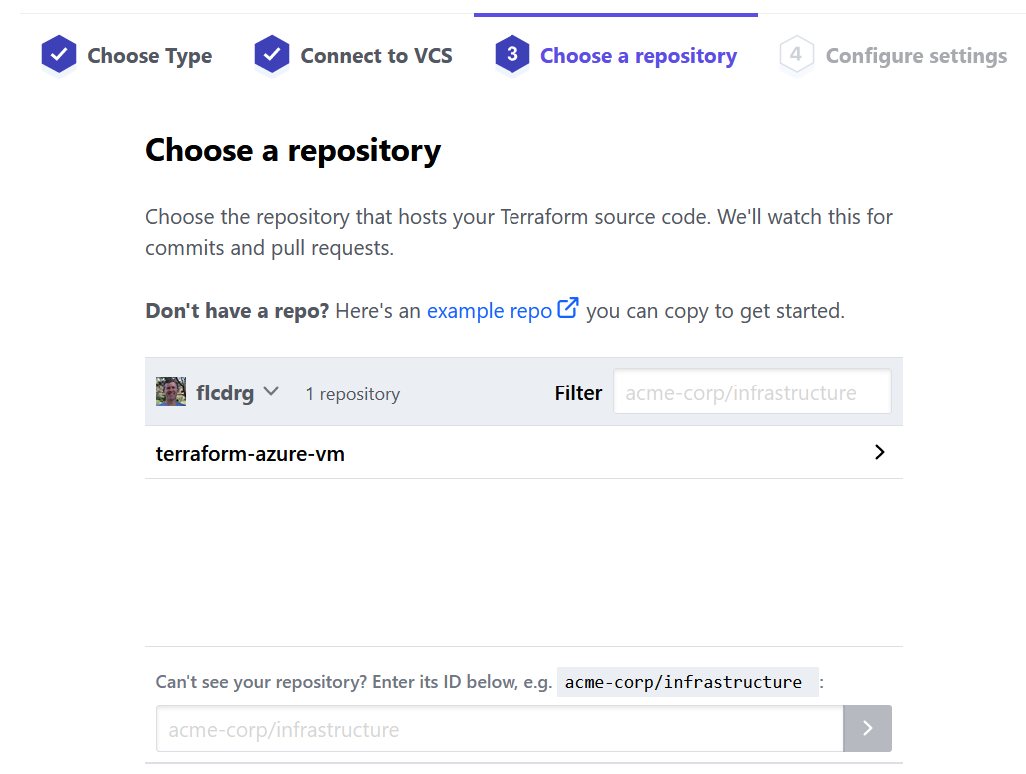

- Now that your GitHub repositories are connected, you need to select the repository that Terraform Cloud will use for this workspace.

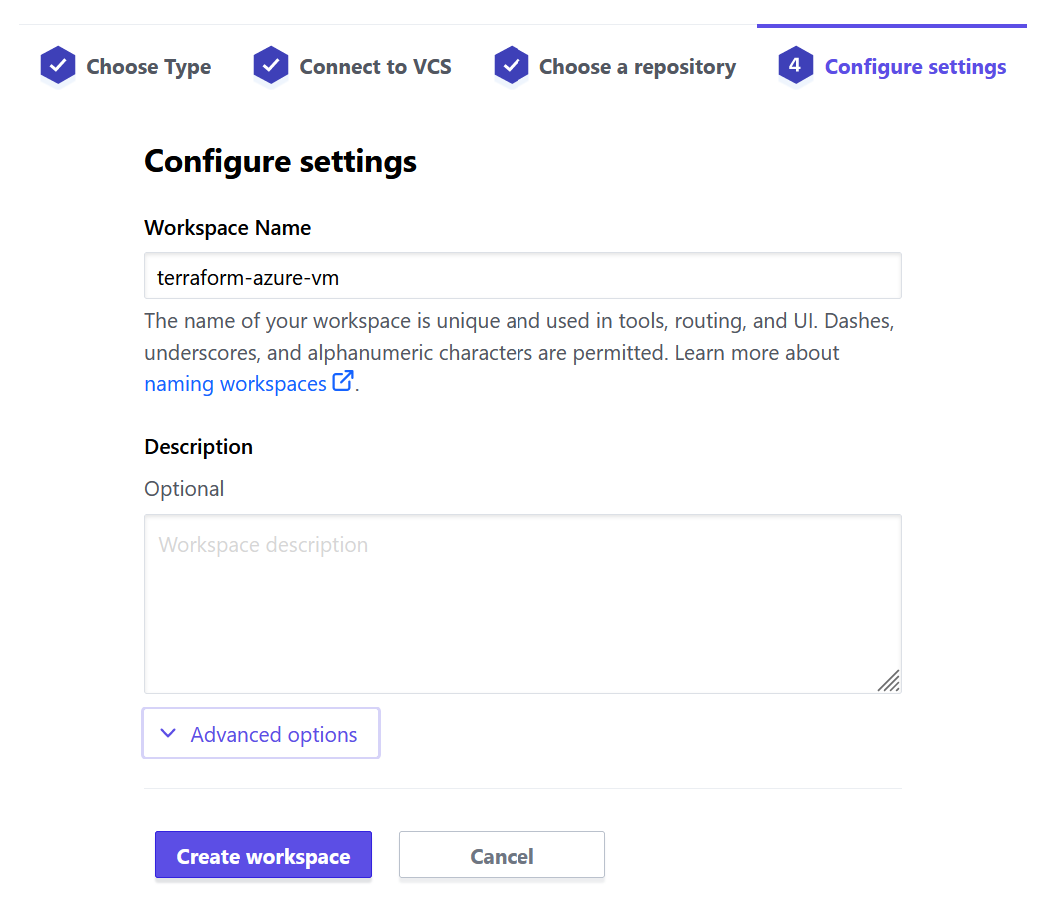

- Enter a workspace name (and optionally a description).

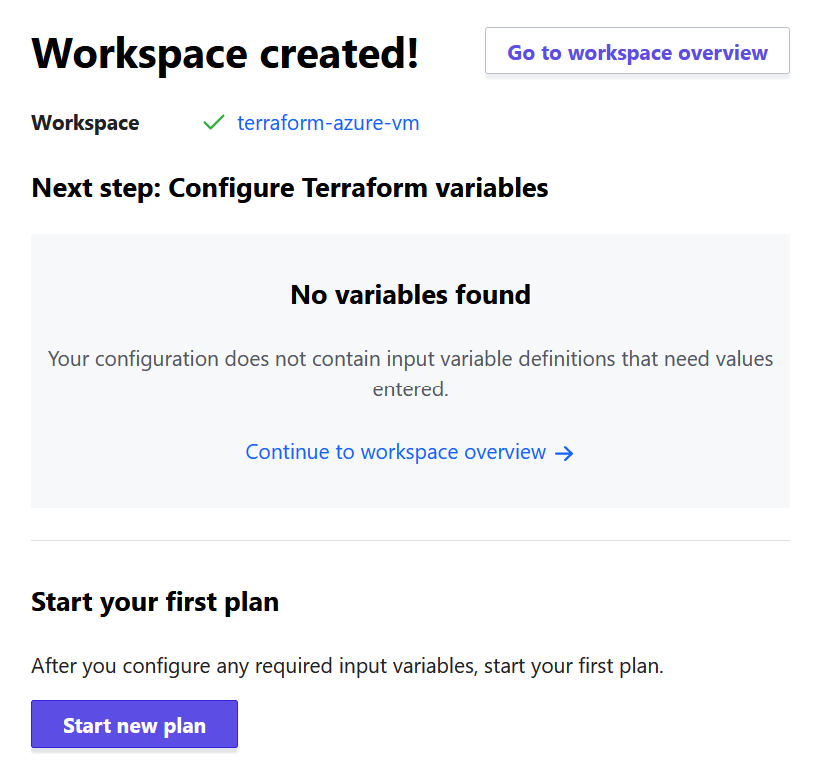

- Now your workspace has been created!
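If you'd rather not click through these steps every time, the same workspace could also be described in Terraform using the `tfe` provider. This is a minimal sketch, not what the repo does: it assumes a GitHub VCS connection already exists in the organisation, that a Terraform Cloud API token is supplied (for example via the `TFE_TOKEN` environment variable), and that `github_oauth_token_id` is a hypothetical variable holding that connection's OAuth token ID.

```hcl
terraform {
  required_providers {
    tfe = {
      source = "hashicorp/tfe"
    }
  }
}

# Hypothetical variable: OAuth token ID of an existing GitHub VCS connection.
variable "github_oauth_token_id" {
  type = string
}

resource "tfe_workspace" "vm" {
  name         = "terraform-azure-vm"
  organization = "flcdrg"
  description  = "Spin up a test Azure VM"

  # false (the default) means each apply waits for manual confirmation.
  auto_apply = false

  # Run speculative (plan-only) runs for pull requests.
  speculative_enabled = true

  # Link the workspace to the GitHub repository.
  vcs_repo {
    identifier     = "flcdrg/terraform-azure-vm"
    oauth_token_id = var.github_oauth_token_id
  }
}
```

The trade-off is that this needs its own state somewhere, so for a single workspace the portal steps are arguably simpler.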

You're now ready to add Terraform files to your GitHub repository. I like to use the Terraform CLI to validate and format my .tf files before I commit them to version control.

After adding

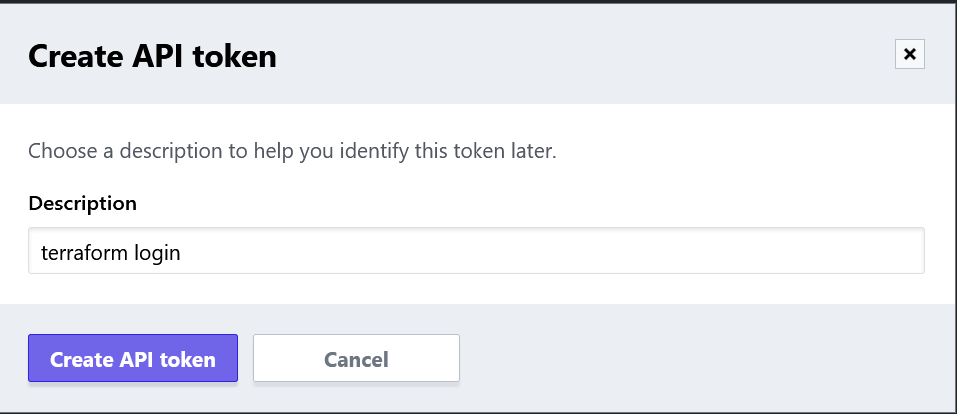

versions.tffile that contains aclouddefinition (along with any providers), you can runterraform loginterraform { cloud { organization = "flcdrg" hostname = "app.terraform.io" workspaces { name = "terraform-azure-vm" } } required_providers { azurerm = { source = "hashicorp/azurerm" version = "=3.39.1" } random = { source = "hashicorp/random" version = "3.4.3" } } } provider "azurerm" { features {} }A browser window will launch to allow you to create an API token that you can then paste back into the CLI.

The next thing we need to do is create an Azure service principal that Terraform Cloud can use when deploying to Azure.

In my case, I created a resource group and granted the service principal Contributor access to it (assuming that all the resources you want Terraform to create will live within that resource group). You could also allow the service principal access to the whole subscription if you prefer.
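Because the resource group already exists outside Terraform and the service principal's rights are scoped to it, the configuration should look the group up with a data source rather than declare it as a resource. A minimal sketch, assuming the group is named `MyResourceGroup` (as in the commands that follow); the `vm` and `vm-vnet` names are hypothetical and the repo may structure this differently:

```hcl
# Look up the pre-created resource group instead of managing it,
# since the service principal only has Contributor rights inside it.
data "azurerm_resource_group" "vm" {
  name = "MyResourceGroup"
}

# Hypothetical example resource placed in that group.
resource "azurerm_virtual_network" "vm" {
  name                = "vm-vnet"
  address_space       = ["10.0.0.0/16"]
  location            = data.azurerm_resource_group.vm.location
  resource_group_name = data.azurerm_resource_group.vm.name
}
```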

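```shell
# Sign in to Azure
az login

# Create the resource group that will hold the VM and its resources
az group create --location westus --resource-group MyResourceGroup

# Create a service principal with Contributor access scoped to that resource group.
# The JSON output contains the credentials needed for the workspace variables.
az ad sp create-for-rbac --name <service_principal_name> --role Contributor --scopes /subscriptions/<subscription_id>/resourceGroups/<resourceGroupName>
```

Now go back to Terraform Cloud, and after selecting the newly created workspace, select Variables.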

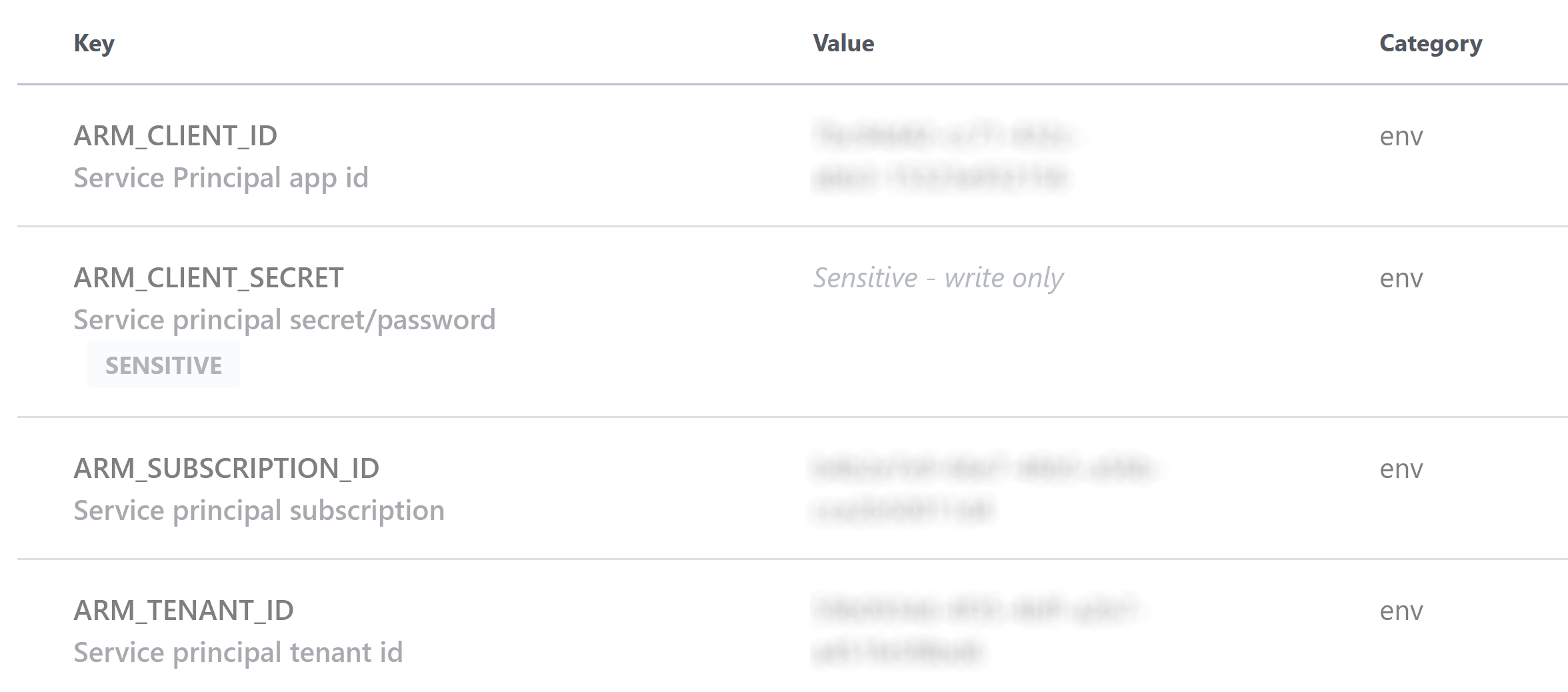

Under Workspace variables, click Add variable, then select Environment variables. Add a variable for each of the following (for `ARM_CLIENT_SECRET`, also check the Sensitive checkbox). For the value, copy the appropriate value from the output of creating the service principal:

- `ARM_CLIENT_ID` - `appId`
- `ARM_CLIENT_SECRET` - `password`
- `ARM_SUBSCRIPTION_ID` - `id` from `az account show`
- `ARM_TENANT_ID`

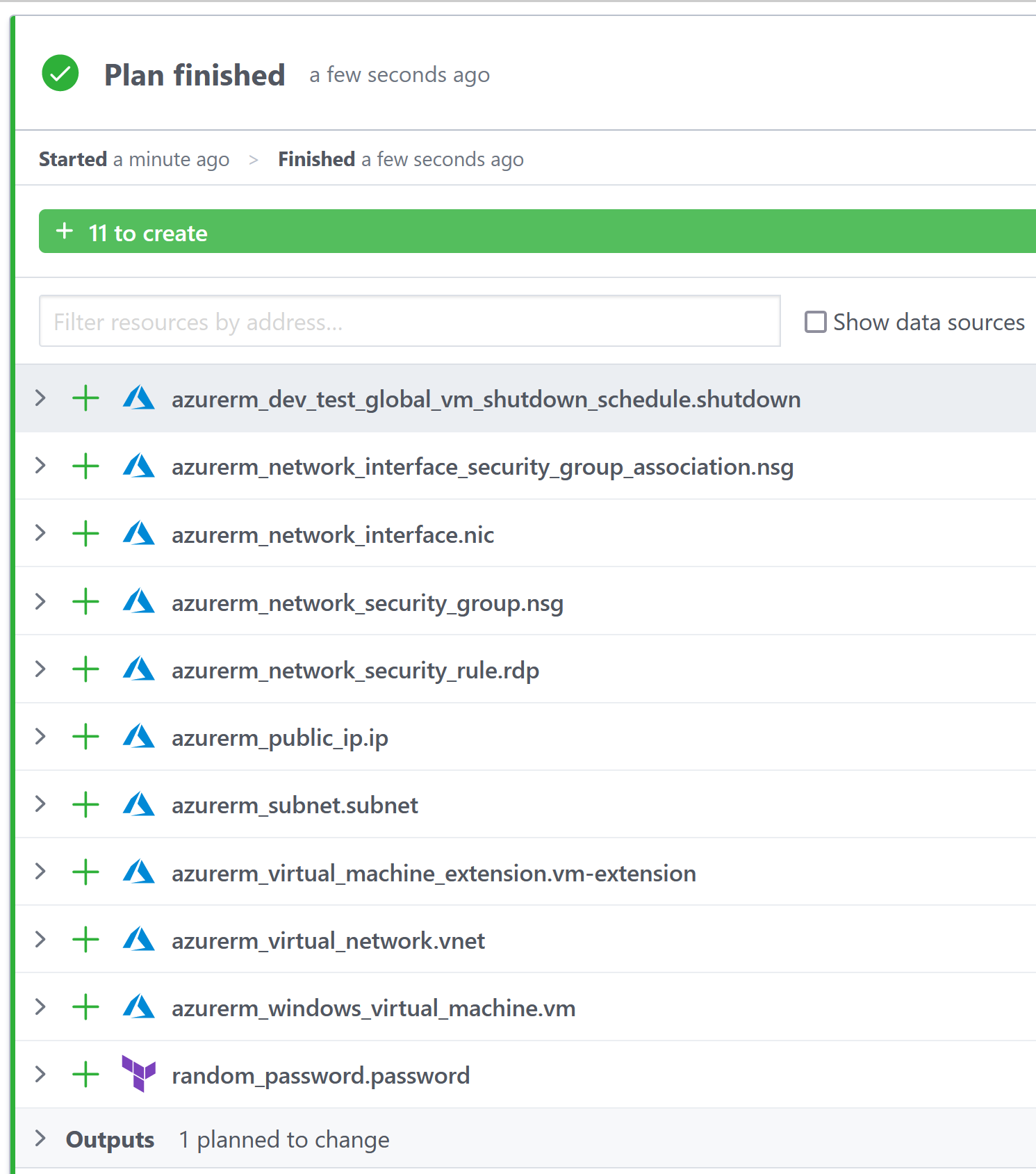

With those variables set, you can now push your Terraform files to the GitHub repository.

The Terraform Cloud workspace is configured to evaluate a plan on pull requests, and on pushes or merges to `main` it will apply those changes.

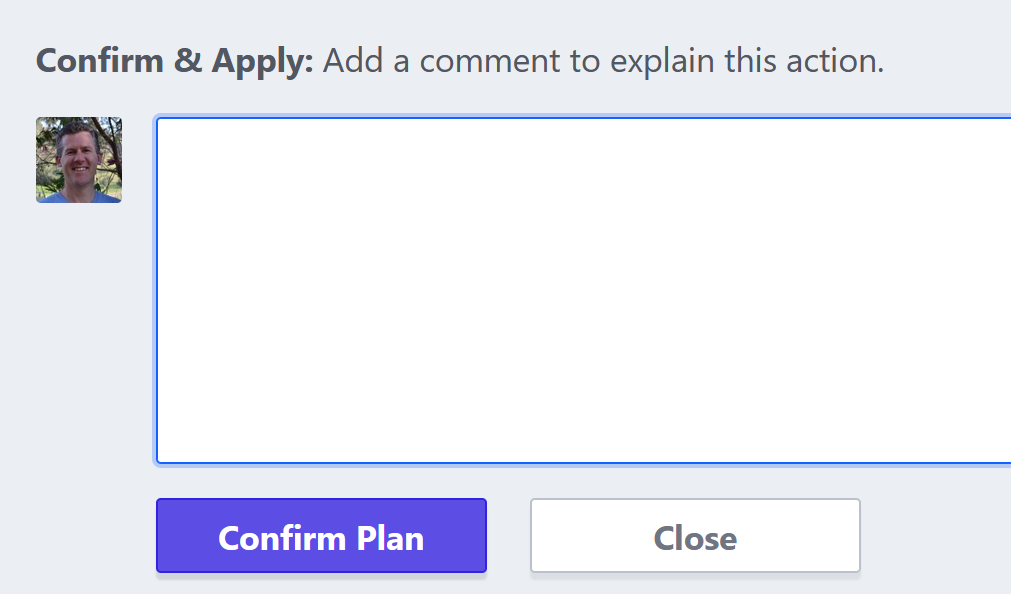

By default, you need to manually confirm before 'apply' will run (you can change the workspace to auto-approve to avoid this).

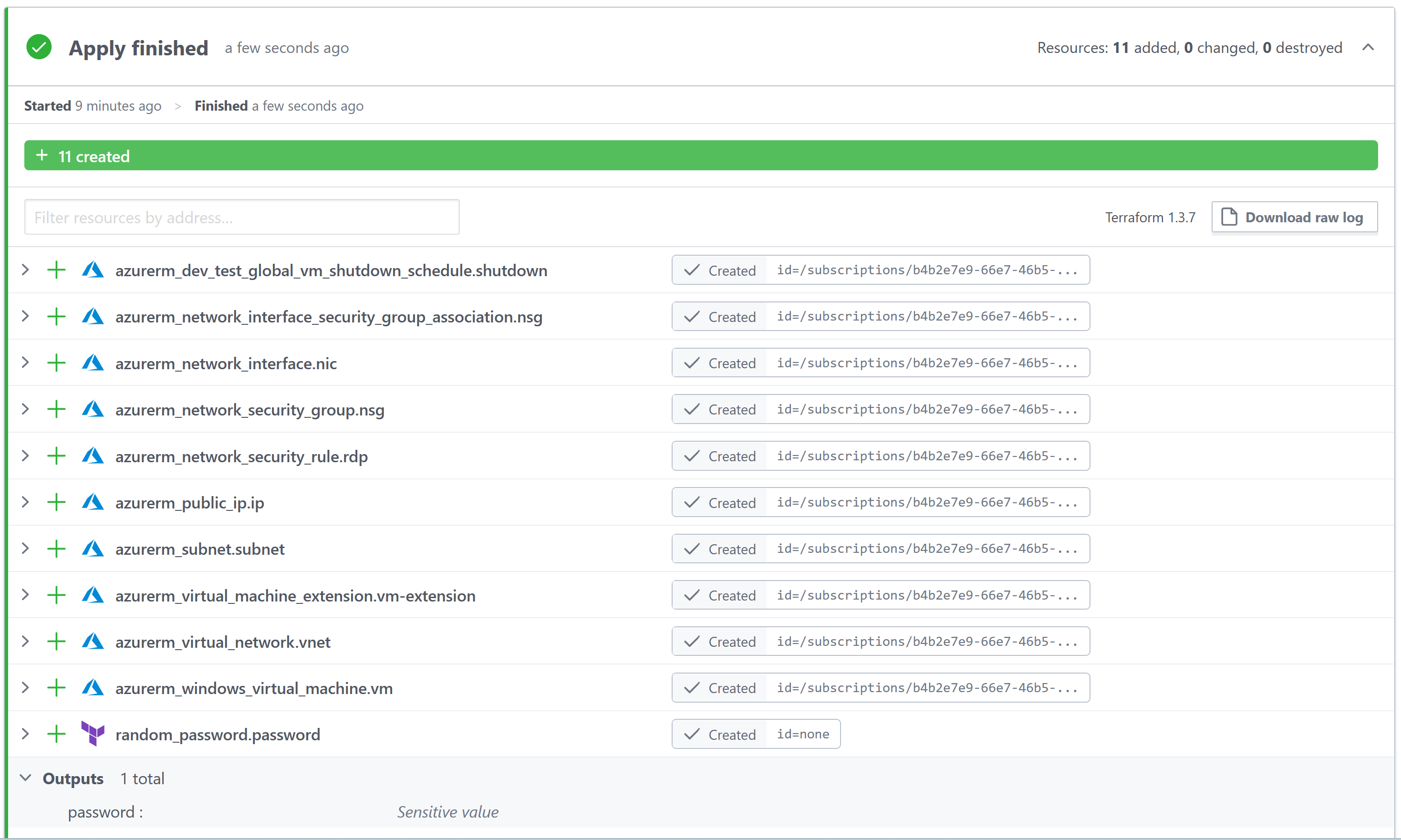

After a short wait, all the Azure resources (including the VM) should be created and ready to use.

Virtual machine password

I'm not hardcoding the password for the virtual machine - rather I'm using the Terraform

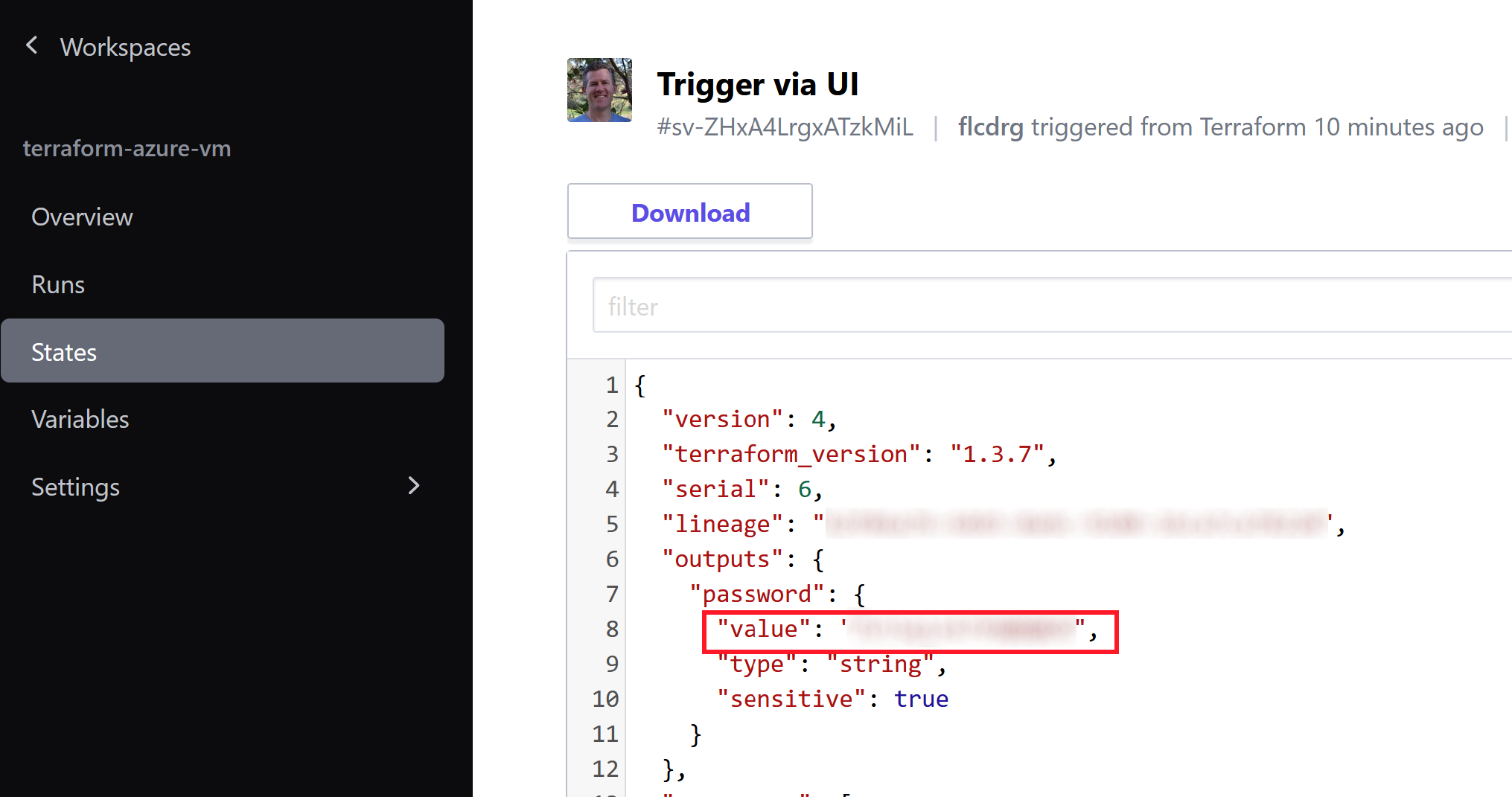

random_passwordresource to generate a random password. The password is not displayed in the logs as it is marked as 'sensitive'. But I will actually need to know the password so I can RDP to the VM. It turns out the password value is saved in Terraform state, and you can examine this via the States tab of the workspace.
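A minimal sketch of that approach, assuming a VM resource named `azurerm_windows_virtual_machine.vm` (hypothetical; the repo's names may differ). The optional sensitive output is an alternative to browsing the state file: after `terraform init` in the local folder, `terraform output -raw vm_admin_password` should print the value.

```hcl
# Generate the admin password instead of hardcoding it.
# The min_* settings help satisfy Azure's Windows password complexity rules.
resource "random_password" "vm_admin" {
  length      = 24
  special     = true
  min_lower   = 1
  min_upper   = 1
  min_numeric = 1
  min_special = 1
}

# In the (hypothetical) VM resource, reference the generated value:
#   resource "azurerm_windows_virtual_machine" "vm" {
#     ...
#     admin_password = random_password.vm_admin.result
#   }
# result is a sensitive attribute, so plans and logs show "(sensitive value)".

# Optional: expose the password as an output, still marked sensitive.
output "vm_admin_password" {
  value     = random_password.vm_admin.result
  sensitive = true
}
```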

With that, I'm now able to navigate to the VM resource in the Azure Portal and connect via RDP and do what I need to do.

If you wanted to stick with the CLI, you can also use Azure PowerShell to launch an RDP session.
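Either way, you need the VM's public IP address to connect. One option is to surface it as a Terraform output. This sketch assumes a hypothetical public IP resource named `azurerm_public_ip.vm` and a resource group data source named `data.azurerm_resource_group.vm`; it uses static allocation because a dynamic address may not be known until it is attached to a running VM.

```hcl
# Hypothetical public IP for the VM's network interface.
resource "azurerm_public_ip" "vm" {
  name                = "vm-pip"
  location            = data.azurerm_resource_group.vm.location
  resource_group_name = data.azurerm_resource_group.vm.name
  allocation_method   = "Static"
  sku                 = "Standard"
}

# Show the address to RDP to after each apply.
output "vm_public_ip" {
  value = azurerm_public_ip.vm.ip_address
}
```

On Windows, `mstsc /v:<address>` then opens a Remote Desktop session to it.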

Extra configuration

If you review the Terraform in the repo, you'll notice I also make use of the `azurerm_virtual_machine_extension` resource to run some PowerShell that installs Chocolatey. That just saves me from having to do it manually. If you can automate it, why not!

Cleaning up when you're done

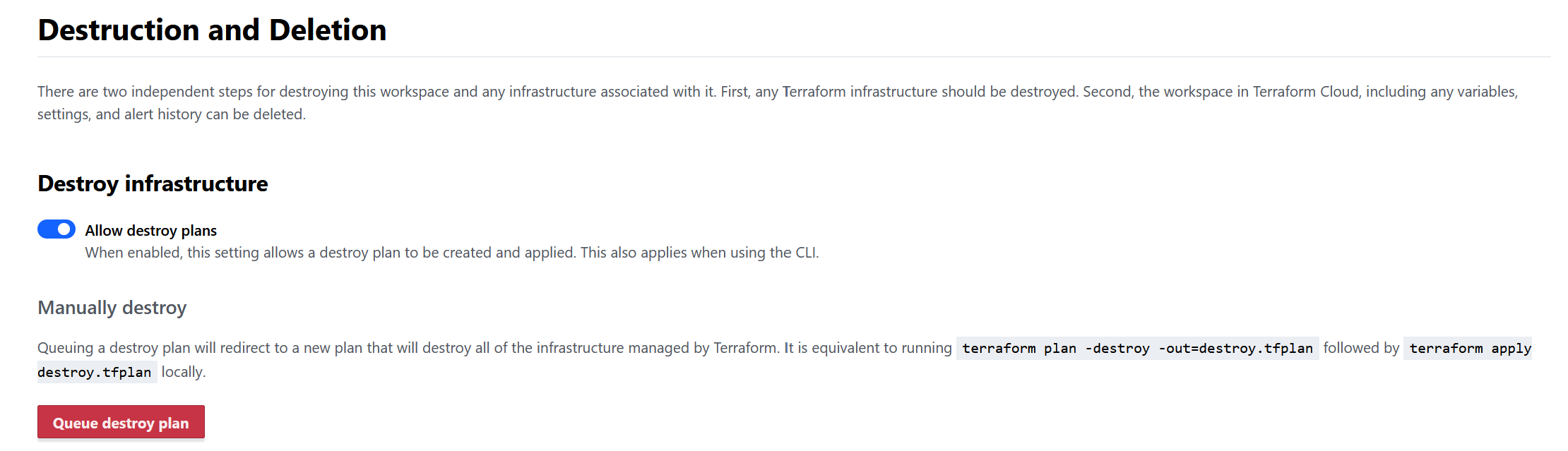

For safety, the virtual machine is set to auto shutdown in the evening, which reduces costs. To completely remove the virtual machine and any associated storage, you can run a "destroy plan".

From the workspace, go to Settings, then Destruction and deletion, and click Queue destroy plan.
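The evening auto-shutdown can itself be declared in Terraform with the `azurerm_dev_test_global_vm_shutdown_schedule` resource, so the destroy plan removes it along with the VM. A sketch, assuming a VM resource named `azurerm_windows_virtual_machine.vm` (hypothetical); the 7pm time and Adelaide time zone are example values, not necessarily what the repo uses.

```hcl
resource "azurerm_dev_test_global_vm_shutdown_schedule" "vm" {
  virtual_machine_id = azurerm_windows_virtual_machine.vm.id
  location           = azurerm_windows_virtual_machine.vm.location
  enabled            = true

  # Shut down at 19:00 every day (HHmm, 24-hour clock).
  daily_recurrence_time = "1900"

  # Windows time zone ID; "Cen. Australia Standard Time" covers Adelaide.
  timezone = "Cen. Australia Standard Time"

  # No email or webhook notification before shutdown.
  notification_settings {
    enabled = false
  }
}
```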


-

Looking back at 2022

As 2022 draws to a close, it's time for me to write my final blog post for the year.

It's been a bit of a mixed bag of a year. COVID restrictions in South Australia have eased, and yet COVID is still an ever-present threat. As far as I know, I've avoided it, but just this week two families I know have lost family members. Such a terrible tragedy. Can I ask that you continue to take precautions to reduce the risk to both yourself and your loved ones? Too often it is those already doing it tough who are impacted the most by this pandemic.

My involvement with video streaming at my local church continues. I enjoy being a camera operator, but I've also taken on a 'producer' role, keeping an eye on the whole service, filling in and troubleshooting as necessary. To contrast those 'behind the scenes' roles, on Christmas Day I took on something new by singing up front!

SevenFold (my band) did a few gigs this year. I do love making music with my friends. I'm looking forward to continuing that in the new year.

On the health front, there have been some challenges for my family. Not to be left out, I had my own face vs. bike path effort. Some minor broken bones in my face, but fortunately no surgery was necessary. It did take quite a few months to start feeling normal again and get back on the bike. A useful reminder that a) I'm getting older and b) I'm not bulletproof.

When not falling off my bike, I've managed to get a few local rides in, as well as the occasional ride with my Dad and the folks from Mud, Sweat and Gears down to McLaren Vale. More frequently I've been taking a walk around the neighbourhood most mornings before starting work. It's good to get some fresh air, stretch my legs, and keep up with my podcasts.

Work at SixPivot is going well. I continue to be impressed by the thoughtful leadership and am feeling quite at home. It was so great to finally meet almost all my colleagues in person at the SixPivot Summit. Our Adelaide team has grown from 2 to 5, and it was great to gather together for a Christmas celebration a few weeks ago.

From a technical perspective, I've been spending a bit more time lately on the 'DevOps' side of things: improving build and deployment automation, and digging deeper into infrastructure with Azure.

I was renewed as a Microsoft MVP, something I don't take for granted and try to leverage to the advantage of our local .NET user group. I'm keenly anticipating news of the next Microsoft MVP Summit. Will it be virtual? Will it be back in person? Hopefully they announce their plans soon.

Speaking of our local .NET User Group, the Adelaide .NET User Group resumed meeting in person for most of the year, though we did have some online-only meetings when necessary. DDD Adelaide is still on hiatus, but I'm hopeful that we might be able to build a new organising team to run a conference later in 2023. It has been encouraging to see both Perth and Brisbane resume their conferences. Maybe in 2023 I'll get to visit some of the interstate events. (I was hoping to get to Brisbane this year, but it ended up being the same weekend as a friend's wedding.) There's always next time.

Weather-wise, it felt like a very wet Winter and Spring. The garden had been so green, but now we've had a few hot days so it does seem like Summer is finally here. It's funny how quickly you go from waiting for the rain to go away, to now hoping for a bit of rain to water the garden!

It is nice to have some time off over the Christmas break. I did have plans to go cycling almost every day and do lots of jobs around the house. That hasn't quite eventuated, but it is good to just pause and take a breath. I'll have another week off later in January to spend time with visiting family.

I pray 2022 finishes well for you, and that whatever situations we find ourselves in during 2023, there are moments of hope and joy for us all.

-

Important changes for Azure DevOps Pipeline agents and GitHub Actions runners

I don't think this has been publicised as widely as it should have been, especially for Azure Pipelines consumers.

`ubuntu-latest` is now resolving to `ubuntu-22.04` rather than `ubuntu-20.04`. This has been mentioned in the GitHub blog, and there is an 'announcement' issue in the runner-images repo, but I haven't seen anything official for Azure DevOps/Azure Pipelines. Both services use the same agent virtual machine images. As of this writing, the Microsoft-hosted agent page still suggests that `ubuntu-20.04` is used.

This issue summarises the software differences between 20.04 and 22.04, and they can be significant. For example, I've already seen builds failing because they were assuming a .NET Core 3.1 SDK was preinstalled. The 22.04 image only includes .NET 6 and 7.

This is in addition to the already announced removal of `ubuntu-18.04`.

So make sure you declare all your tool requirements in your pipelines. Even better, run your jobs in a container (Azure Pipelines or GitHub Actions) with a custom container image that precisely specifies the tools and versions required to build and deploy your application.