-

Why is hardware so hard?

I'm looking to refresh my home office computer hardware. In summary, it's doing my head in!

I guess I'm mostly a software guy, so selecting hardware (and trying to ensure that my selections are going to be compatible) seems to take far longer than I'd like. I know it's a simplification, but I wish hardware was more like Lego. You're confident that different kits, even years apart in manufacturing, are still going to work together.

You only have to go searching vendor support forums to find numerous posts from people struggling to get hardware that should work together to play nicely. Docks seem to be a sore point here. I've seen first-hand how troublesome those can be, even with the laptop and dock from the same vendor.

The desktop PC I've been using is being decommissioned and I want to replace it with a new laptop.

Almost 5 years ago I bought a Dell XPS 15 9550 (with my own money). It's an Intel i7 6700HQ with 16GB RAM, a 512GB SSD and a 4K (3840x2160) touch screen. I'd like my new device to be at least on par with this.

My shopping list:

- Intel Core i7

- 32GB RAM

- 512GB-1TB M.2 SSD

Trying to spec out a new laptop is hard. Tick all the boxes and then see the price, then pick myself up off the floor and try again...

-- David Gardiner (@DavidRGardiner), January 15, 2021

Chris Walsh came out of left field suggesting the new Apple M1 hardware could be worth considering, though Jeff Wilcox suggested waiting for the next models. I've never owned any macOS / OS X hardware, and I am hearing good things about the new M1 stuff, but the timing isn't quite right to be making a big platform jump like that right now.

I'm also thinking this is a chance to update my displays. I currently have 3 Full HD (1920x1080) displays, and two of those are at least 6 years old. I didn't realise how poor the colour/contrast was on them until one broke on the journey moving from the office to working from home, and I had to replace it with a relatively cheap newer model. But now 4K is a thing, so could I run 1, 2 or even all 3 4K monitors?

But how do you run more than a single 4K monitor? Probably with a dock of some kind, but does the dock use Thunderbolt, USB-C or DisplayLink?

Apparently you can run 2 4K displays if you have Thunderbolt 4. That sounds useful, except Thunderbolt monitors are really expensive (and that's just the Thunderbolt 3 ones, not even TB 4).

I called out on Twitter, asking about docking stations:

Looking for dock recommendations that runs 2x4K + 1xHD monitors. Does it matter between USB-C or Thunderbolt?

-- David Gardiner (@DavidRGardiner), January 14, 2021

To summarise the replies:

- Simon Waight uses a Targus Universal DV4K Docking Station

- Bill Chesnut has also heard good things about the Targus, but personally uses the Dell Dock WD19 180W

- Greg Low and Adam Fowler have had good experiences with Lenovo docks, e.g. the Lenovo ThinkPad Thunderbolt 3 Dock Gen 2

- Corneliu Tusnea uses the Pluggable USB-C 4K Triple Display Docking Station

and Chris Walsh also pointed out that Thunderbolt is mandatory if you want to drive more than one 4K monitor.

I'm leaning towards another Dell laptop and might as well go with the Dell WD19TB dock. I thought it would be wise to review the WD19TB supported resolutions. That mentions DisplayPort 1.4.

I had been looking at some 4K monitors, but most of those only support DisplayPort 1.2. Time to do some more reading up on the difference between DisplayPort 1.2 and 1.4. Not surprisingly, 1.4 is better, but is 1.2 good enough for my needs?

If I have a laptop that supports DisplayPort 1.4, do the dock and display also need 1.4, or is 1.2 ok? Another question posted, this time to the Dell Community forums, and a few hours later I got some helpful responses. It sounds like I should be fine. Just as well, as there are hardly any docks around that support DisplayPort 1.4, and likewise the only monitors I could find were the pricey top-end models.
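For anyone wanting to sanity-check the DisplayPort 1.2 vs 1.4 question, here is a rough back-of-envelope sketch in Python. It compares the uncompressed pixel data rate of a display against the effective data rate of a four-lane link (17.28 Gbit/s for DisplayPort 1.2 at HBR2, 25.92 Gbit/s for DisplayPort 1.4 at HBR3). The function names are made up for illustration, and it assumes 24 bits per pixel at 60Hz while ignoring blanking overhead and Display Stream Compression, so treat the results as a guide only. It says nothing about how a particular dock actually shares bandwidth between its ports.

```python
# Effective data rates (after 8b/10b encoding) of a 4-lane link, in Gbit/s.
DP_EFFECTIVE_GBPS = {
    "DisplayPort 1.2 (HBR2)": 17.28,
    "DisplayPort 1.4 (HBR3)": 25.92,
}


def pixel_data_rate_gbps(width, height, refresh_hz=60, bits_per_pixel=24):
    """Uncompressed active-pixel data rate in Gbit/s (blanking ignored)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def fits(link_gbps, *stream_rates_gbps):
    """True if the combined streams stay within the link's data rate."""
    return sum(stream_rates_gbps) <= link_gbps


uhd = pixel_data_rate_gbps(3840, 2160)  # one 4K display at 60Hz

for name, capacity in DP_EFFECTIVE_GBPS.items():
    print(f"{name}: one 4K60 fits: {fits(capacity, uhd)}, "
          f"two 4K60 fit: {fits(capacity, uhd, uhd)}")
```

By this estimate a single 4K display at 60Hz needs roughly 12 Gbit/s, which sits comfortably within a DisplayPort 1.2 link. That lines up with the forum advice that a 1.2 monitor should be fine. It is only when several 4K streams have to share one link that the extra headroom of 1.4 starts to matter.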

That 'supported resolution' table for the WD19TB dock didn't list '2xDP and 1xHDMI' as an option for 3 monitors (though it did have '2xDP and 1xUSB-C'). Could I use an HDMI port on the laptop (instead of the dock)? Maybe, or the other option is to use a USB-C to HDMI adapter. Turns out even K-Mart have those for $10! I later realised that I actually have one of those already in the form of a Dell DA200 USB-C Multi-Port Adapter that I'd bought to use with my XPS 9550. It was sitting on the desk right in front of me the whole time 🤣

Hopefully that's the display stuff sorted. Then there's storage. Are you fine with the stock SSD, or do you upgrade? You might be better off ordering with the smallest drive and then replacing it with a larger (faster?) 3rd party SSD. But if you do that, have you got a way to migrate your data to the new disk (or don't you care)? More things to consider.

Anyway, I think I've made some progress. So, a big thanks to the community for advice and suggestions (though let me know if there's anything else I should consider). Now to put together a final selection and make the order!

-

Holiday learning

I'm in the middle of 3 weeks of annual leave. It's great to just put work aside for a bit and take time to unwind. I've been out walking, cycling, drying apricots and making apricot jam, catching up with friends and family, amongst other things.

I thought I'd use some of my time off to get more familiar with Azure and GitHub and have been pleasantly surprised by the learning materials available over at docs.microsoft.com.

Learning content is organised in modules - these are self-contained units of work. Modules might be grouped together in a 'Learning Path'. Some content relates to specific Microsoft exams, so if you're interested in gaining a specific certification you can work back from the exam requirements to help ensure you've covered all the areas.

For example, to achieve the Microsoft Certified: DevOps Engineer Expert certification, after you've achieved one of the associate prerequisites, you then need to pass Exam AZ-400: Designing and Implementing Microsoft DevOps Solutions.

One of the learning paths for this exam is AZ-400: Manage source control.

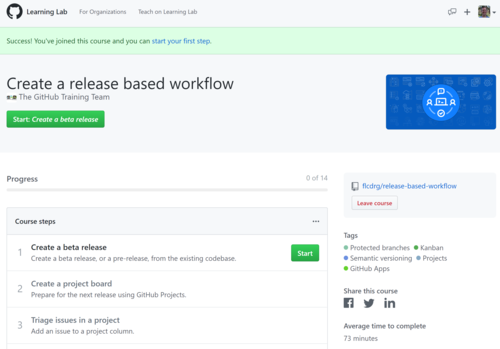

In that learning path is the module Manage software delivery by using a release based workflow on GitHub.

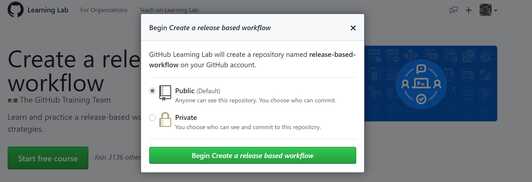

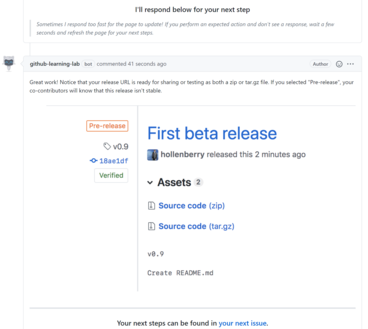

The GitHub-related content usually has an overview and some introductory material, followed by a practical exercise that actually uses GitHub. The exercise automatically clones a repository into your own GitHub account, then steps you through using GitHub issues (and sometimes pull requests), with automated responses updating the issues or moving you to the next step once you've done what's required. It's really quite clever!

Now that the repository has been created, you can click on Start to begin the process. As you complete each step it will be marked as complete (so you can come back to finish the exercise later if you don't finish it in one sitting).

There are some instructions to follow. I found it's easiest to right-click on the link to open it in another tab, follow the instructions, and then come back to this tab to wait for the next bit (which will be added as a comment to the issue).

Then click on the link to the next issue to follow on with the next step.

At the conclusion of the exercise, you head back to the docs site for a knowledge check with a multiple choice quiz to check that you've understood the main concepts for the module, and then you're done!

Some modules covered concepts I was already familiar with, so if I felt the practical exercise didn't contain anything new then I'd just skip directly to the knowledge check.

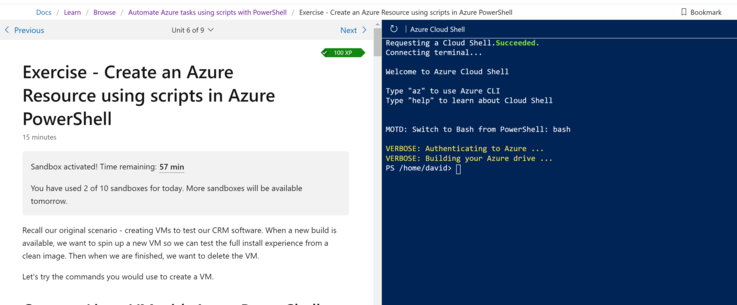

Azure learning modules are similar, except that instead of using GitHub, they often include access to temporary Azure resources. Some modules might embed an Azure Cloud Shell right in your browser on the same page as the instructions. Others will ask you to log in to the Azure Portal so you can follow through creating or manipulating resources there.

You'll be asked to activate the sandbox (and will probably need to review permissions).

Here you can see the "Microsoft Learn Sandbox" subscription, which is used for the learning activities. It is only a temporary subscription and will disappear after a few hours, and more importantly means any resources used there don't cost you anything.

Here's an example of a page using the Azure Cloud Shell. Many modules will use Bash, but this specific example is using PowerShell:

The modules will often check your work to confirm that you've followed the instructions correctly:

Also worth mentioning: if you'd like some free instructor-led training, check out the Microsoft Azure Virtual Training Day: Fundamentals sessions that are being run during January and February. As a bonus, attendees will be eligible to take the Microsoft Azure Fundamentals certification exam for free! (Credit to Bronwen Zande for tweeting about this.)

So if you're looking to upskill, or just deepen your knowledge of Azure and GitHub then now is a great time to dive in.

8-Jan-2021 - Added screenshot of Azure cloud shell

-

You're in control. Farewell 2020

It's New Year's Eve 2020. This year (and what a year) is almost done. Tomorrow we start over with the start of 2021!

One thing I wanted to talk about as we finish up 2020 is 'taking control', specifically with social media.

It is easy to coast, to be just a passive consumer. I know I often find myself doing it. But I think that can be risky. One day you discover you're completely swamped, maybe even drowning with all this stuff. That's not good!

Social media is a tool. It can be great - keeping in touch with friends and conversing with people you might otherwise never have the opportunity to meet in real life. But it can also be misused and be a negative, destructive influence. Not to mention the agendas and commercial interests of the social media platforms themselves. Remember nothing is free. There's always a cost. Someone always ends up paying.

Be deliberate

Be intentional about what you're sharing and what you're consuming. I know it's easy to just post whatever comes to mind. Maybe before you hit Post, take 5 minutes, then come back and review it with fresh eyes. Do I really need to post this? Is this constructive, helpful, building people up? Great! No? Maybe there's a better way to express yourself. Are you (or your current/future employer) going to be comfortable re-reading this in a few months or years?

Be careful

Do you really want to share your location/GPS coordinates? Most platforms do a good job of stripping these from photo uploads, but I know with my own blog I need to do that manually.
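If you're in the same boat and need to strip location data yourself, the sketch below shows one possible approach in Python using only the standard library. It is not the process used for this blog. The function name `strip_exif` is hypothetical, and it takes the blunt route of dropping the whole Exif block (which is where GPS coordinates live) rather than just the GPS tags, so things like camera model and orientation go too. It also assumes a well-formed JPEG with no padding bytes between segments.

```python
import struct


def strip_exif(jpeg_bytes: bytes) -> bytes:
    """Return a copy of the JPEG with any APP1 'Exif' segments removed."""
    if jpeg_bytes[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG (missing start-of-image marker)")

    out = bytearray(b"\xff\xd8")
    pos = 2
    while pos + 4 <= len(jpeg_bytes):
        if jpeg_bytes[pos] != 0xFF:
            raise ValueError("unexpected byte where a marker should be")
        marker = jpeg_bytes[pos + 1]
        if marker == 0xDA:
            # Start of Scan: the compressed image data follows, copy the rest.
            out += jpeg_bytes[pos:]
            return bytes(out)
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", jpeg_bytes[pos + 2:pos + 4])
        segment = jpeg_bytes[pos:pos + 2 + length]
        is_exif = marker == 0xE1 and segment[4:10] == b"Exif\x00\x00"
        if not is_exif:
            out += segment
        pos += 2 + length

    # No Start of Scan found: keep whatever trailing bytes remain.
    out += jpeg_bytes[pos:]
    return bytes(out)
```

To use it you would read a photo's bytes, pass them through `strip_exif`, and write the result to a new file before uploading. Keep the original, and check the output in an image viewer, since this is a sketch and not a tested tool.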

Are friends ok with being tagged or shared in a photo?

Delete

Is a particular social platform causing you stress? Deleting your profile (or even just taking a break for a few days) might be helpful. If you do choose to delete, it might be worth downloading all your data first.

Remember, this is social media - not real life! Real friendship exists beyond any particular technology platform.

Block

Sometimes you follow or friend someone who turns out to be a bit of a 'froot loop'. There's no rule to say you have to keep following or stay connected. Delete the connection. If they're pestering you, block them.

Mute

Maybe they're not a complete froot loop, but going through an odd phase. Many social platforms allow you to mute someone. You can still stay connected, but you won't see their posts.

Filter

Some platforms allow you to define keywords that you want to exclude from your news feed. If there are words you find offensive or topics you don't care about, use this feature.

Take control. Make healthy choices. Stay safe. Happy New Year and all the best for 2021!🙂🎆🧨🎇